Do Machines Disagree like Humans in Ambiguous Visual Question Answering?

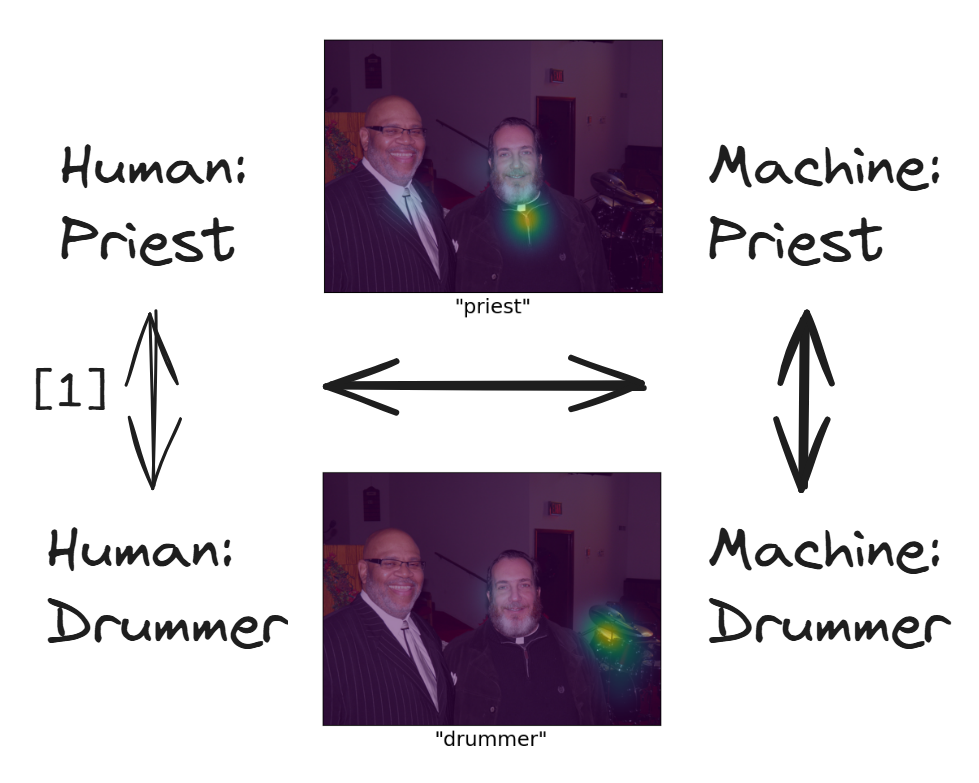

Description: When presented with the same question about an image, humans often give a variety of valid but disagreeing answers, indicating differences in their reasoning. In previous work [1], we found that visual attention maps extracted from eye tracking can provide insight into the sources of such disagreement in Visual Question Answering (VQA). Disagreement also occurs between humans and machines, which is particularly relevant for collaborative AI. It remains an open research question whether this disagreement is caused by differences in perception, conception, or reasoning and, more fundamentally, whether machines are capable of handling ambiguity in the question or image.

The goal of this project is to take a first step in this direction by comparing neural attention from VQA models to human attention in the presence of ambiguity. Potential research questions are:

- Do machines also show different attention patterns when they generate different answers?

- Do machines consider multiple plausible answers?

- Do machines and humans attend to the same subset of areas when giving similar answers?
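One possible way to make the second question measurable is to look at how much probability a model spreads over competing answers. The following is only a minimal sketch, assuming a classification-style VQA model that outputs one logit per entry of a fixed answer vocabulary; the function names, the toy logits, and the 0.2 threshold are hypothetical choices for illustration and are not part of the project or any existing implementation. Generative models would need a different approach (for example, sampling several answers).

```python
import numpy as np

def answer_distribution(logits):
    """Softmax over answer logits (numerically stabilised)."""
    logits = np.asarray(logits, dtype=np.float64)
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

def entropy_bits(probs):
    """Shannon entropy in bits; higher values mean the model hedges more."""
    probs = np.asarray(probs, dtype=np.float64)
    nonzero = probs[probs > 0]
    return float(-np.sum(nonzero * np.log2(nonzero)))

def plausible_answers(logits, answers, threshold=0.2):
    """Return (answer, probability) pairs whose probability reaches the threshold.

    The threshold is an arbitrary illustrative value, not a recommended setting.
    """
    probs = answer_distribution(logits)
    order = np.argsort(-probs)  # most probable first
    return [(answers[i], float(probs[i])) for i in order if probs[i] >= threshold]

# Toy example with a hypothetical three-answer vocabulary.
answers = ["red", "orange", "blue"]
ambiguous_logits = [2.0, 2.0, -5.0]   # two answers compete
confident_logits = [8.0, 0.0, 0.0]    # one answer dominates

print(plausible_answers(ambiguous_logits, answers))
print(entropy_bits(answer_distribution(ambiguous_logits)))
print(entropy_bits(answer_distribution(confident_logits)))
```

In this toy setting, the ambiguous case yields two candidate answers and an entropy of roughly one bit, while the confident case yields an entropy close to zero. Whether such output probabilities reflect genuine consideration of alternatives is exactly what the project would need to examine.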

The project can build on previous work and existing implementations for extracting both human and machine attention. The main contributions are the experiment design, the selection of comparable cases, and their analysis.

Goals:

- extract neural attention from VQA models (e.g. using AttnLRP [3])

- analyse attention differences between disagreeing model answers

- compare human (MHUG dataset [2]) and model attention under disagreement
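As an illustration of the comparison step, attention maps can be treated as probability distributions over image locations and compared with standard similarity measures. The sketch below uses the Jensen-Shannon divergence and the Pearson correlation coefficient as two possible measures; it assumes that human and model maps have already been resampled to the same grid, and that negative relevance values (which attribution methods can produce) are simply clipped. All function names and the toy maps are hypothetical and do not reflect the MHUG or AttnLRP code.

```python
import numpy as np

def normalize_map(attn):
    """Clip negatives and scale a 2D attention map so that it sums to 1."""
    attn = np.clip(np.asarray(attn, dtype=np.float64), 0.0, None)
    total = attn.sum()
    if total == 0:
        # Assumption: an empty map is treated as uniform attention.
        return np.full(attn.shape, 1.0 / attn.size)
    return attn / total

def js_divergence(map_a, map_b):
    """Jensen-Shannon divergence in bits: 0 = identical, 1 = disjoint."""
    p = normalize_map(map_a).ravel()
    q = normalize_map(map_b).ravel()
    m = 0.5 * (p + q)

    def kl(a, b):
        mask = a > 0  # b is positive wherever a is, because b is the mixture
        return float(np.sum(a[mask] * np.log2(a[mask] / b[mask])))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def pearson_cc(map_a, map_b):
    """Pearson correlation between two maps; NaN if either map is constant."""
    p = normalize_map(map_a).ravel()
    q = normalize_map(map_b).ravel()
    if p.std() == 0 or q.std() == 0:
        return float("nan")
    return float(np.corrcoef(p, q)[0, 1])

# Toy 3x3 maps: one human map and two hypothetical model maps.
human = [[0, 0, 0], [0, 4, 0], [0, 0, 0]]
model_same = [[0, 0, 0], [0, 2, 0], [0, 0, 0]]    # attends to the same cell
model_other = [[3, 0, 0], [0, 0, 0], [0, 0, 0]]   # attends elsewhere

print(js_divergence(human, model_same), pearson_cc(human, model_same))
print(js_divergence(human, model_other), pearson_cc(human, model_other))
```

The same functions could be applied between the attention maps of two disagreeing model answers. Which measure is most appropriate for ambiguous cases is a design decision left to the experiment design.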

Supervisors: Fabian Kögel and Susanne Hindennach

Distribution: 20% literature, 20% experiment design, 20% implementation, 40% analysis and discussion

Requirements: high motivation, programming skills in Python and PyTorch, experience in data analysis, knowledge of deep learning and model internals

Literature:

[1] Susanne Hindennach, Lei Shi, and Andreas Bulling. 2024. Explaining Disagreement in Visual Question Answering Using Eye Tracking. In 2024 Symposium on Eye Tracking Research and Applications (ETRA ’24).

[2] Ekta Sood, Fabian Kögel, Florian Strohm, Prajit Dhar, and Andreas Bulling. 2021. VQA-MHUG: A Gaze Dataset to Study Multimodal Neural Attention in Visual Question Answering. In Proceedings of the 25th Conference on Computational Natural Language Learning.

[3] Reduan Achtibat et al. 2024. AttnLRP: Attention-Aware Layer-Wise Relevance Propagation for Transformers. In Proceedings of the 41st International Conference on Machine Learning.