Gaze-Driven Foveation for Efficient VLMs

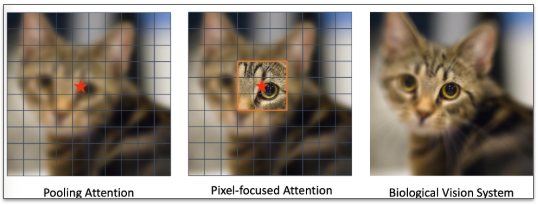

Description: VLMs are inherently computationally intensive, often processing entire high-resolution images even when only a small portion is relevant to the task. This project addresses this challenge by treating human gaze as a real-time signal. Develop a pre-processing pipeline that intercepts a user's VQA request. Instead of feeding the full image to a VLM, this pipeline will construct a specialized "foveated" input, drawing inspiration from the dual-path design of TransNeXt.

Goals:

- To build a training-free framework that uses human gaze data to dramatically reduce the computational cost (FLOPs, token count) of VLM inference

- Develop a pre-processing pipeline that intercepts a user's VQA request

- Evaluate on tasks like VQA (accuracy) and also evaluate efficiency gains

Supervisor: Souptik Majumdar

Distribution: 20% Literature Review, 50% Implementation, 30% Analysis

Requirements: Self-motivated, deep learning knowledge, understanding of Transformers architecture, PyTorch experience, LLM experience optional

Literature:

[1] Shi, D. "TransNext: Robust Foveal Visual Perception for Vision Transformers", arXiv 2024.

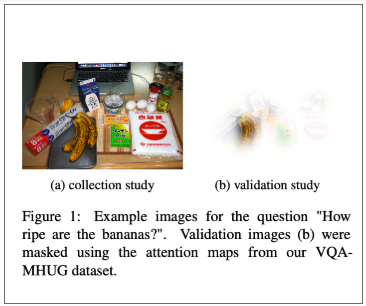

[2] Sood, Ekta, Fabian Kögel, Florian Strohm, Prajit Dhar, and Andreas Bulling. "VQA-MHUG: A gaze dataset to study multimodal neural attention in visual question answering."