Episodic Memory Search by Narrations with Temporal Semantics in Egocentric Videos

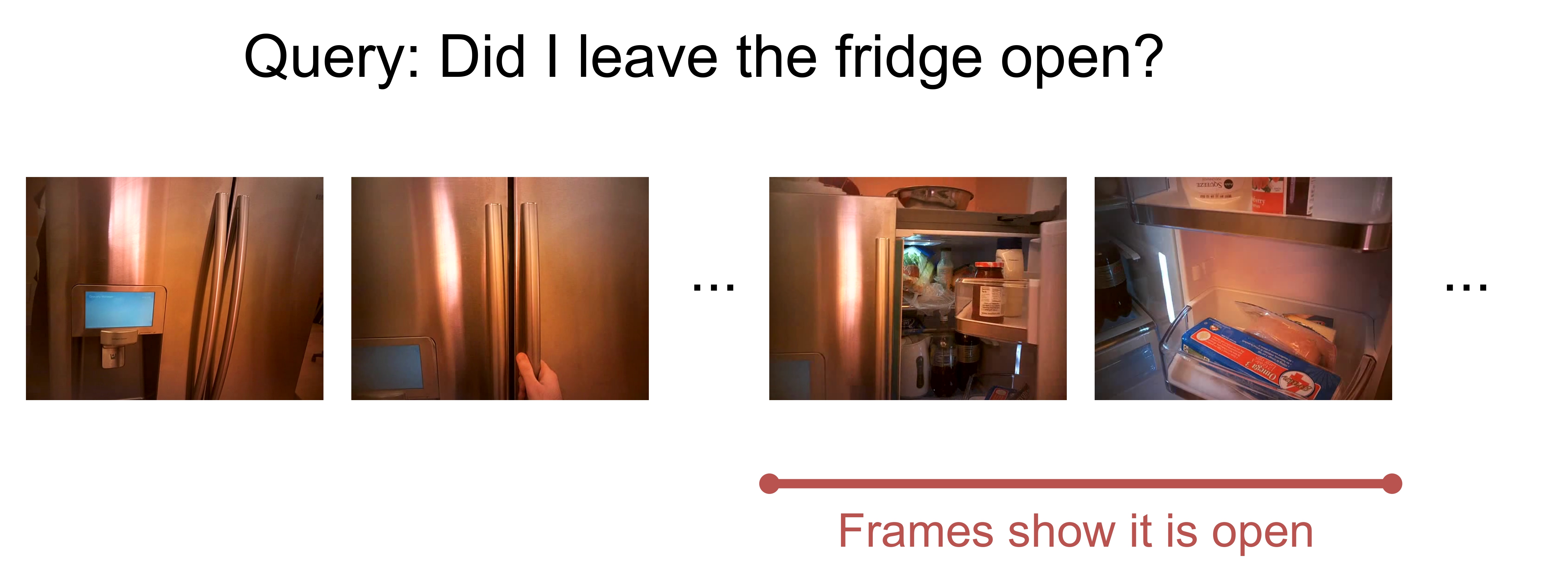

Description: The task of episodic memory is to temporally localise the frames in a long egocentric video that match a given query. For example, given the query 'Did I leave the refrigerator open?', a model returns the frames of the long video that answer this query. The main paradigm for episodic memory uses a video encoder and a text encoder to process the video and the query, respectively, followed by a temporal localisation module that retrieves the matching frames.
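To illustrate only the final localisation step of this paradigm, the sketch below assumes that the video and text encoders have already produced one feature vector per frame and one for the query. `cosine` and `localise` are hypothetical helpers. The fixed-length sliding window is a deliberate simplification: learned localisation modules predict start and end boundaries directly. Note that every frame must be encoded before it can be scored, and this is exactly the computational cost this project targets.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def localise(
    frame_feats: List[Sequence[float]], query_feat: Sequence[float], window: int
) -> Tuple[int, int]:
    """Return (start, end) frame indices, end exclusive, of the fixed-length
    window whose summed frame-query similarity is highest.

    Assumption: frame_feats come from a video encoder and query_feat from a
    text encoder that share one embedding space (both are stubbed here).
    """
    if not 1 <= window <= len(frame_feats):
        raise ValueError("window must be between 1 and the number of frames")
    # One similarity score per frame: this requires encoding the whole video.
    scores = [cosine(f, query_feat) for f in frame_feats]
    # Sliding-window sum over the per-frame scores.
    best_start = 0
    current = sum(scores[:window])
    best = current
    for start in range(1, len(scores) - window + 1):
        current += scores[start + window - 1] - scores[start - 1]
        if current > best:
            best, best_start = current, start
    return best_start, best_start + window
```

For instance, with per-frame features `[[1, 0], [0, 1], [0, 1], [1, 0]]`, query `[0, 1]` and `window=2`, `localise` returns `(1, 3)`, the two middle frames.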

One limitation of this paradigm is the high computational cost of video processing: the video encoder has to process the entire long video, which typically lasts more than 10 minutes. The current method uses the video's narrations to generate video segments and then searches only the segments most likely to match the query. In this project, we will generate accurate, dense narrations to represent the video and group them into segments that capture the temporal dependencies in the video, further reducing the computational cost of the episodic memory task.
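Narration-guided search can be sketched as follows. This is a minimal sketch under stated assumptions, not the method of [1,2]. Narrations are assumed to be timestamped strings sorted by time. A bag-of-words cosine similarity stands in for a learned text encoder, and consecutive narrations are grouped into fixed-size segments. `Narration`, `segment_narrations`, `candidate_spans` and `encoded_fraction` are hypothetical names. Only the returned spans would then be passed to the expensive video encoder.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Narration:
    time: float  # start time in seconds (assumed format)
    text: str


def bow(text: str) -> Counter:
    """Bag-of-words counts: a hypothetical stand-in for a learned text encoder."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def bow_cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def segment_narrations(
    narrs: List[Narration], size: int, video_end: float
) -> List[Tuple[float, float, str]]:
    """Group consecutive narrations (assumed sorted by time) into segments of `size`.

    A segment runs from its first narration to the first narration of the next
    segment, or to `video_end` for the last segment.
    """
    segments = []
    for i in range(0, len(narrs), size):
        group = narrs[i:i + size]
        end = narrs[i + size].time if i + size < len(narrs) else video_end
        segments.append((group[0].time, end, " ".join(n.text for n in group)))
    return segments


def candidate_spans(
    narrs: List[Narration], query: str, size: int, video_end: float, top_k: int
) -> List[Tuple[float, float]]:
    """Return the (start, end) times of the top_k segments most similar to the
    query, in temporal order. Only these spans would go to the video encoder."""
    q = bow(query)
    segments = segment_narrations(narrs, size, video_end)
    ranked = sorted(segments, key=lambda s: bow_cosine(bow(s[2]), q), reverse=True)
    return sorted((start, end) for start, end, _ in ranked[:top_k])


def encoded_fraction(spans: List[Tuple[float, float]], video_end: float) -> float:
    """Fraction of the video (assumed to start at 0 s) the video encoder must process."""
    return sum(end - start for start, end in spans) / video_end
```

For example, consider six narrations at 5-second intervals over a 30-second clip ("C picks up a knife", "C cuts an onion", "C opens the fridge", "C puts milk in the fridge", "C washes a plate", "C dries a plate"), grouped in pairs. Here the query "Did I put the milk in the fridge?" with `top_k=1` selects only the span `(10.0, 20.0)`, so the video encoder would process a third of the video. The project proposes to replace the fixed-size grouping with segments that follow the temporal structure of the narrations.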

Goals:

- Follow [3] and combine the findings of [1,2] to develop a model that generates dense narrations with temporal semantics and uses them to search for episodic memory queries efficiently.

- Evaluate the model's accuracy on the episodic memory task and its computational cost.

- If the accuracy rivals that of existing methods while the computational cost decreases significantly, publication in a top-tier conference is very likely.

Supervisors: Chuhan Jiao and Lei Shi

Distribution: 20% Literature, 50% Implementation, 30% Evaluation

Requirements:

* Strong programming skills in Python and PyTorch.

* Background and prior experience in multimodal learning (i.e. video and text).

Literature: [1] Ramakrishnan, Santhosh Kumar, Ziad Al-Halah, and Kristen Grauman. "SpotEM: Efficient video search for episodic memory." International Conference on Machine Learning. PMLR, 2023.

[2] Ramakrishnan, Santhosh Kumar, Ziad Al-Halah, and Kristen Grauman. "NaQ: Leveraging narrations as queries to supervise episodic memory." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2023.

[3] Grauman, Kristen, et al. "Ego4D: Around the world in 3,000 hours of egocentric video." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022.