Cognitive Attention Guided Imitation Learning

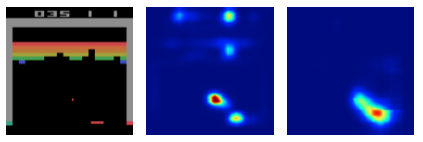

Description: While reinforcement learning has celebrated many successes in recent times - for instance by achieving super-human performance at playing Go, chess or poker - many challenging benchmarks and problems remain in which human performance is still superior. Inspired by the fact that human gaze is an intention-revealing signal, previous works have explored guiding reinforcement learning with human gaze and have shown improvements [1]. In these works, gaze is often either not estimated at all, with ground-truth gaze data used only during training [1], or it is estimated using neural methods [3].

Since such neural methods often require significant compute resources and ground-truth human gaze is not always available, we draw on work on cognitive models of human visual attention (e.g. EMMA [4]) and propose to combine these models with current techniques for guiding reinforcement learning agents. This brings an additional advantage: because cognitive models are not trained on any specific gaze dataset, we hypothesize that the method is easy to transfer to new environments. We want to test this idea on the Atari-HEAD dataset [2] (for which gaze data exists) and then transfer it to other environments for which no such gaze data exists.
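To make the idea of gaze guidance more concrete, the following minimal sketch shows one simple way a predicted gaze position could be turned into an attention map and used to weight an input frame. It is an illustration under stated assumptions, not the method of the cited works or of this thesis: the function names `gaze_heatmap` and `apply_attention`, the Gaussian shape, the `sigma` value, the 84x84 frame size and the example gaze position are all hypothetical. In the proposed setting, the gaze position would come from a cognitive model rather than from an eye tracker or a neural gaze predictor.

```python
import numpy as np


def gaze_heatmap(height, width, gaze_xy, sigma=5.0):
    """Return a (height, width) map that peaks at the gaze position and sums to 1.

    Assumption: attention falls off as an isotropic Gaussian around the gaze point.
    """
    gx, gy = gaze_xy
    ys, xs = np.mgrid[0:height, 0:width]
    sq_dist = (xs - gx) ** 2 + (ys - gy) ** 2
    heat = np.exp(-sq_dist / (2.0 * sigma ** 2))
    return heat / heat.sum()


def apply_attention(frame, heat):
    """Weight a grayscale frame by the heatmap, scaled so the peak weight is 1."""
    return frame * (heat / heat.max())


# Hypothetical 84x84 grayscale frame with a predicted gaze at x=60, y=20.
frame = np.ones((84, 84), dtype=np.float32)
heat = gaze_heatmap(84, 84, gaze_xy=(60, 20))
masked = apply_attention(frame, heat)
```

The weighted frame keeps pixels near the predicted gaze position and suppresses the rest, so it could be fed to a policy network alongside or instead of the raw frame. Which of these variants works best is an open design choice.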

Goal: Fuse cognitive models with attention-guided imitation learning to obtain a novel, more parameter-efficient approach to challenging reinforcement learning problems. If successful, this thesis could be published as a paper at a major conference.

Supervisors: Anna Penzkofer and Constantin Ruhdorfer

Distribution: 20% literature review, 60% implementation, 20% analysis

Requirements: Good knowledge of deep learning and reinforcement learning, strong programming skills in Python and PyTorch, interest in cognitive modeling, self-management skills.

Literature:

[1] Akanksha Saran, Ruohan Zhang, Elaine S. Short, and Scott Niekum. 2021. Efficiently Guiding Imitation Learning Agents with Human Gaze. In Proceedings of the 20th International Conference on Autonomous Agents and MultiAgent Systems (AAMAS '21). International Foundation for Autonomous Agents and Multiagent Systems, Richland, SC, 1109–1117. https://arxiv.org/abs/2002.12500

[2] Ruohan Zhang, Calen Walshe, Zhuode Liu, Lin Guan, Karl S Muller, Jake A Whritner, Luxin Zhang, Mary M Hayhoe, and Dana H Ballard. 2020. Atari-HEAD: Atari Human Eye-Tracking and Demonstration Dataset. AAAI (2020). https://arxiv.org/abs/1903.06754

[3] Ruohan Zhang, Zhuode Liu, Luxin Zhang, Jake A Whritner, Karl S Muller, Mary M Hayhoe, and Dana H Ballard. 2018. AGIL: Learning attention from human for visuomotor tasks. In Proceedings of the European Conference on Computer Vision (ECCV). 663–679. https://arxiv.org/abs/1806.03960

[4] Dario D. Salvucci. 2001. An integrated model of eye movements and visual encoding. Cognitive Systems Research 1, 4 (2001), 201–220. doi: 10.1016/S1389-0417(00)00015-2. https://www.sciencedirect.com/science/article/pii/S1389041700000152